Microsoft adds new Designer protections following Taylor Swift deepfake debacle

AI image generators have the potential to spur creativity and revolutionize content creation for the better. However, when misused, they can cause real harm by spreading misinformation and damaging reputations. Microsoft is hoping to prevent further misuse of its generative AI tools by implementing new protections.

Last week, AI-generated deepfakes sexualizing Taylor Swift went viral on Twitter. The images were reportedly shared via 4chan and a Telegram channel where users share AI-generated images of celebrities created with Microsoft Designer.

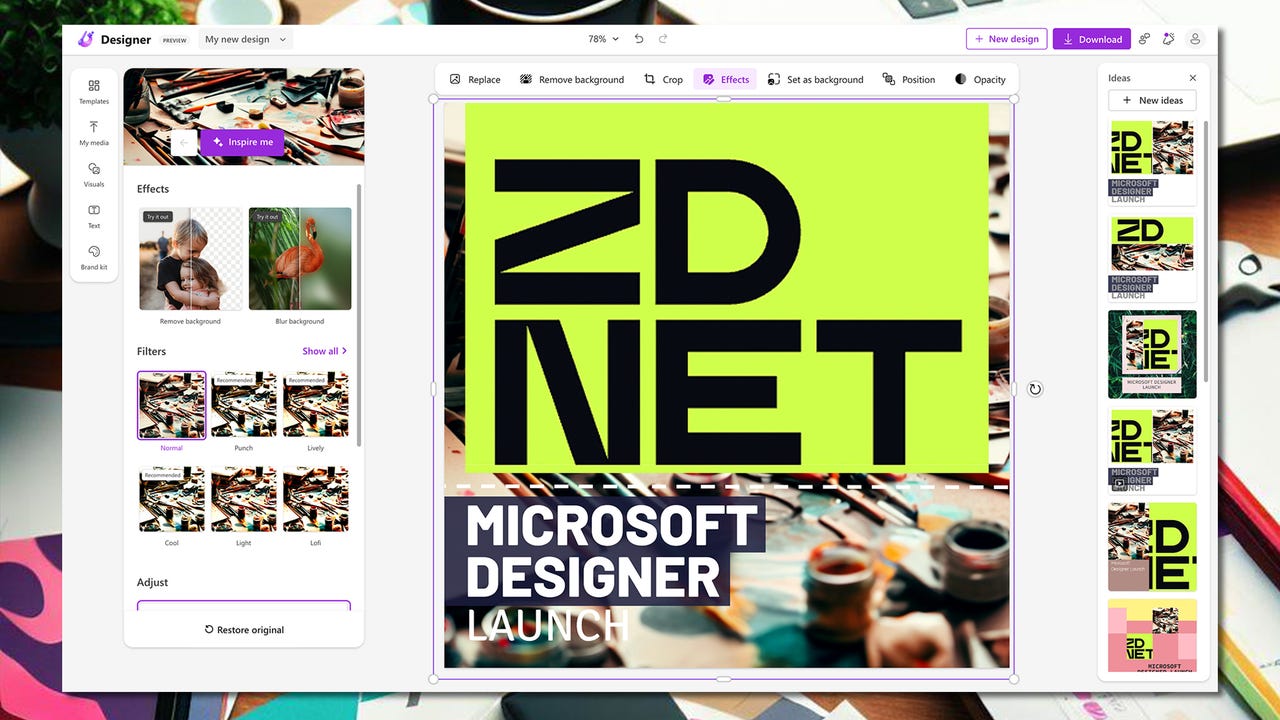

Microsoft Designer is Microsoft's graphic design app. It includes Image Creator, the company's AI image generator, which uses DALL-E 3 to generate realistic images. The generator already had guardrails that blocked inappropriate prompts explicitly mentioning nudity or public figures.

However, users found loopholes, such as misspelling celebrity names and writing prompts that avoided explicitly sexual terms but still produced the same result, according to the report.

Microsoft has now addressed these loopholes, and Image Creator appears to block prompts for images of celebrities. I tried entering the prompt, "Selena Gomez playing golf," on Image Creator and received an alert that said my prompt was blocked. I also tried misspelling her name and got the same alert.

"We are committed to providing a safe and respectful experience for everyone," a Microsoft spokesperson told ZDNET. "We are continuing to investigate these images and have strengthened our existing safety systems to further prevent our services from being misused to help generate images like them."

In addition, the Microsoft Designer Code of Conduct explicitly prohibits the creation of adult or non-consensual intimate content, and a violation of that policy can result in losing access to the service entirely.

According to the report, some users in the Telegram channel have already expressed interest in finding a workaround to these new protections. This is likely to remain a long-running cat-and-mouse game, with bad actors finding and exploiting loopholes in generative AI tools and the companies behind those tools rushing to close them.